Just another one of my random thoughts I have been having recently.

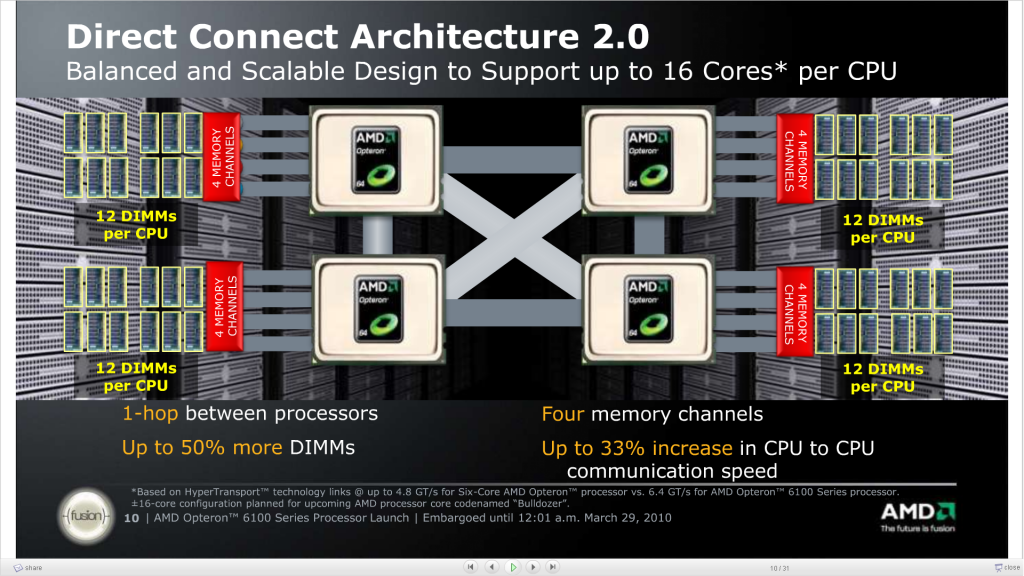

Chuck wrote a blog post not too long ago about how he believes everyone is going to move to Intel (or x86 at least) processors in their systems and away from ASICs.

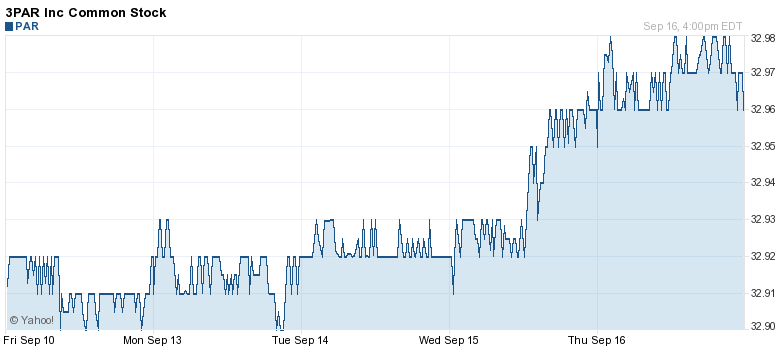

He illustrated his point by saying some recent SPEC SFS results showed the Intel-based system outperforming everything else. The results were impressive; the only flaw is that costs are not disclosed for SPEC results. An EMC VMAX with 96 EFDs isn’t cheap, and the better your disk subsystem is, the faster your front end can be.

Back when Exanet was still around they showed me some results from one of their customers testing SPEC SFS on the Exanet LSI (IBM OEM’d) back end storage vs 3PAR storage, and for the same number of disks the SPEC SFS results were twice as high on 3PAR.

But that’s not my point or my question here. A couple of years ago NetApp posted some pretty dismal results for the CX-3 with snapshots enabled. EMC doesn’t do SPC-1, so NetApp did it for them. Interesting.

After writing up that Pillar article where I illustrated the massive efficiency gains of the 3PAR architecture (which is in part driven by their own custom ASICs), it got me thinking again, because as far as I can tell Pillar uses x86 CPUs.

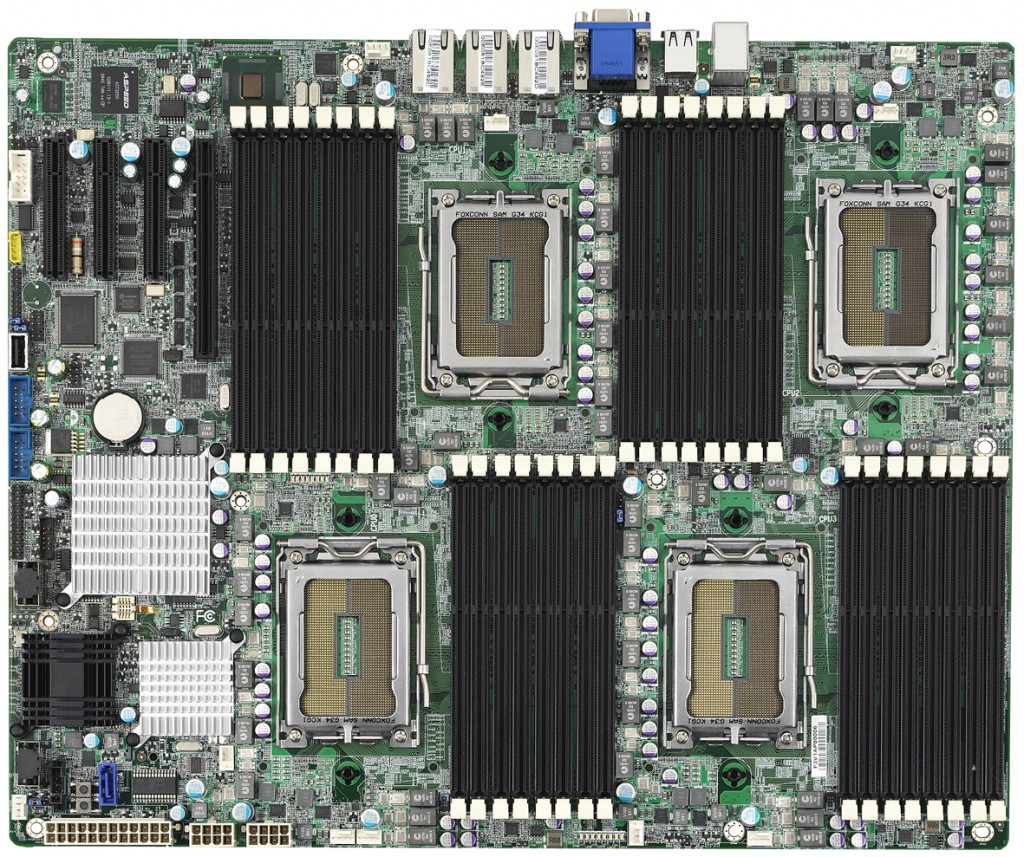

Pillar offers multiple series of storage controllers to best meet the needs of your business and applications. The Axiom 600 Series 1 has dual-core processors and supports up to 24GB cache. The Axiom 600 Slammer Series 2 has quad-core processors and double the cache providing an increase in IOPS and throughput over the Slammer Series 1.

Now I can only assume they are using x86 processors. For all I know they could be using Power or SPARC, but I doubt they are using ARM 🙂

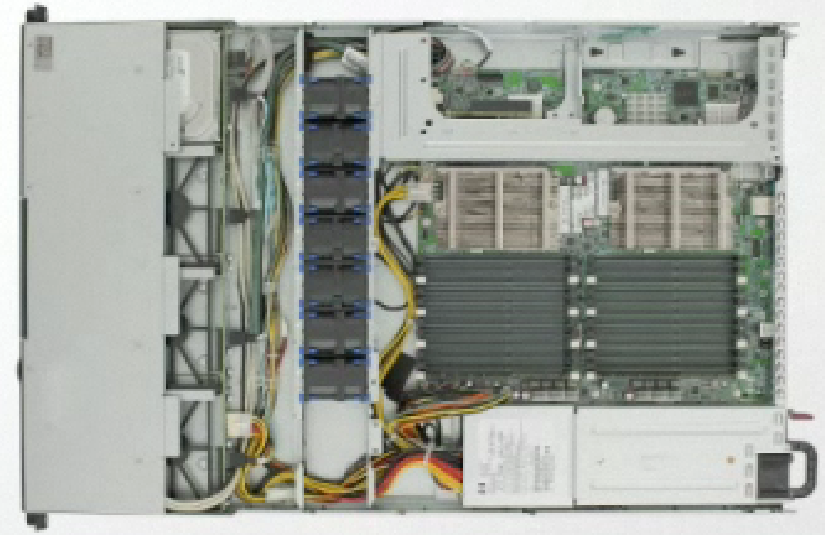

Anyway, back to the 3PAR architecture and their micro RAID design. I have written in the past about how you can have tens to hundreds of thousands of mini RAID arrays on a 3PAR system, depending on the amount of space that you have. This is, of course, to maximize distribution of data over all resources for the sake of performance and predictability. When running RAID 5 or RAID 6 there are, of course, parity calculations involved. I can’t help but wonder what sort of chance in hell a bunch of x86 CPU cores have of calculating RAID in real time for 100,000+ RAID arrays. With 3 and 4TB drives not too far out, you can take that 100,000+ and make it 500,000.
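For anyone wondering what a "parity calculation" actually involves, here is a minimal sketch in Python, assuming plain single-parity XOR in the RAID 5 style. This is not how 3PAR (or anyone else) implements it in their ASIC or firmware; the function names `xor_parity` and `rebuild_missing` are made up for illustration, and RAID 6 needs a second, more expensive syndrome that is not shown here.

```python
from functools import reduce


def xor_parity(chunks):
    """Return the byte-wise XOR of equally sized chunks (RAID 5 style parity)."""
    if not chunks:
        raise ValueError("need at least one chunk")
    size = len(chunks[0])
    if any(len(c) != size for c in chunks):
        raise ValueError("all chunks must be the same size")
    # zip(*chunks) walks the chunks column by column, one byte position at a time.
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*chunks))


def rebuild_missing(surviving_chunks, parity):
    """Recover a single lost data chunk from the survivors plus the parity chunk."""
    return xor_parity(list(surviving_chunks) + [parity])


# Hypothetical 4-byte "chunks"; real chunklets are far larger.
data = [b"\x01\x02\x03\x04", b"\x10\x20\x30\x40", b"\xaa\xbb\xcc\xdd"]
parity = xor_parity(data)

# Pretend the middle chunk was lost and rebuild it from the rest.
recovered = rebuild_missing([data[0], data[2]], parity)
```

The math itself is trivial. The open question is doing it for every write across hundreds of thousands of small arrays at once, which is where dedicated silicon may earn its keep.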

Taking the 3PAR F400 SPC-1 results as an example, here is my estimate of the number of RAID arrays on the system. Fortunately it’s mirrored, so the math is easier:

- Usable capacity = 27,053 GB (27,702,272 MB)

- Chunklet size = 256MB

- Total number of RAID-1 arrays = ~108,212

- Total Number of data chunklets = ~216,424

- Number of data chunklets per disk = ~563

- Total data size per disk = ~144,128 MB (140.75 GB)
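The estimate above can be reproduced with a few lines of Python. Treat it as a back-of-the-envelope sketch: the usable capacity and chunklet size are the figures listed above, while the disk count of 384 is an assumption, back-solved from the ~563 chunklets per disk figure.

```python
USABLE_GB = 27_053    # usable capacity from the list above
CHUNKLET_MB = 256     # chunklet size from the list above
MIRROR_COPIES = 2     # RAID-1: every chunklet has one mirror
DISK_COUNT = 384      # assumption: back-solved from ~563 chunklets per disk

usable_mb = USABLE_GB * 1024
raid1_arrays = usable_mb // CHUNKLET_MB           # one mini array per usable chunklet
data_chunklets = raid1_arrays * MIRROR_COPIES     # both sides of every mirror
chunklets_per_disk = data_chunklets // DISK_COUNT
data_per_disk_mb = chunklets_per_disk * CHUNKLET_MB

print(f"Usable capacity:     {usable_mb:,} MB")
print(f"RAID-1 arrays:       {raid1_arrays:,}")
print(f"Data chunklets:      {data_chunklets:,}")
print(f"Chunklets per disk:  {chunklets_per_disk:,}")
print(f"Data per disk:       {data_per_disk_mb:,} MB ({data_per_disk_mb / 1024:.2f} GB)")
```

Swap in a different capacity or disk count to see how quickly the array count grows with bigger drives.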

For legacy RAID designs it’s probably not a big deal, but as disk drives grow ever bigger I have no doubt that everyone will have to move to a distributed RAID architecture, to reduce disk rebuild times and lower the chances of a double/triple disk failure wiping out your data. It is unfortunate (for them) that Hitachi could not pull that off in their latest system.

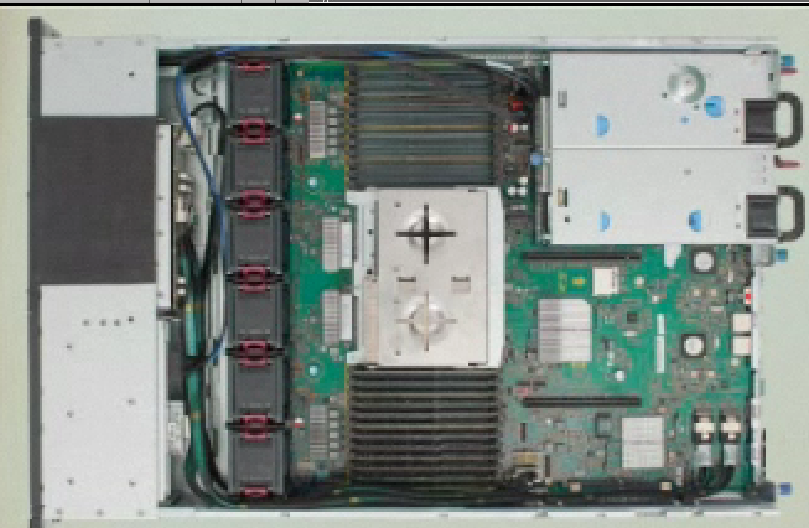

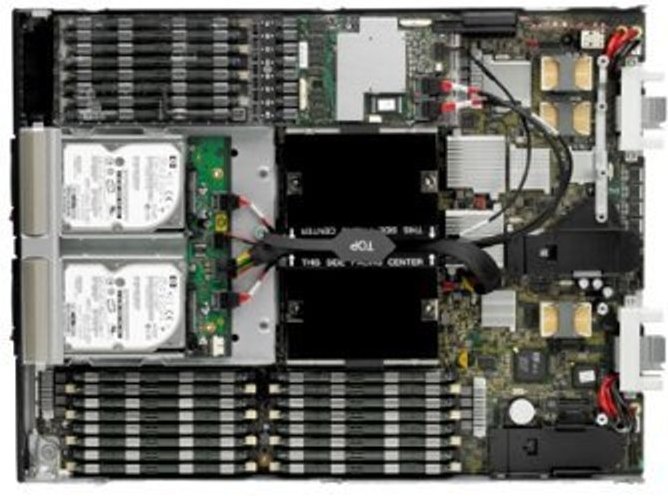

3PAR does use Intel CPUs in their systems as well, though they aren’t used too heavily. On the systems I have had, even at peak spindle load, I never really saw CPU usage above 10%.

I think ASICs are here to stay for some time. On the low end you will be able to get by with generic CPU stuff, but on the higher end it will be worth the investment to do it in silicon.

Another place to look for generic CPUs vs ASICs is in the networking space. Network devices are still heavily dominated by ASICs because generic CPUs just can’t keep up. Now of course generic CPUs are used for what I guess could be called “control data”, but the heavy lifting is done by silicon. ASICs often draw a fraction of the power that generic CPUs do.

Yet another place to look for generic CPUs vs ASICs is in the HPC space: the rise of GPU-assisted HPC has allowed clusters to scale to what were (to me anyways) unimaginable heights.

Generic CPUs are of course great to have and they have come a long way, but there is a lot of cruft in them, so it all depends on what you’re trying to accomplish.

The fastest NAS in the world is still BlueArc, which is powered by FPGAs, though their early cost structures put them out of reach for most folks. Their new mid range looks nice. My only long-time complaint about them has been their back end storage: either LSI or HDS, take it or leave it. So I leave it.

The only SPEC SFS results BlueArc has posted on the current version of the benchmark are for the mid range. Their high end was tested on the previous version of SFS, with nothing yet for the current one.