[(More) Minor updates since original post] I don’t think I’ll ever work for a company that is in a position to need something like a VSP, but that doesn’t stop me from being interested in how it performs. After all, it has been almost four years since Hitachi released results for their USP-V, which had, at the time, very impressive numbers from a performance perspective (record breaking even, only to be dethroned by the 3PAR T800 in 2008), though not so much from a cost perspective, not surprisingly.

So naturally, ever since Hitachi released their VSP (which is OEM’d by HP as their P9500) about a year ago, I have been very curious as to how well it performs. It certainly sounded like a very impressive system on paper with regard to performance: it can scale to 2,000 disks and a full terabyte of cache. Out of curiosity, I recently read an interesting white paper (I guess you could call it that) on the VSP.

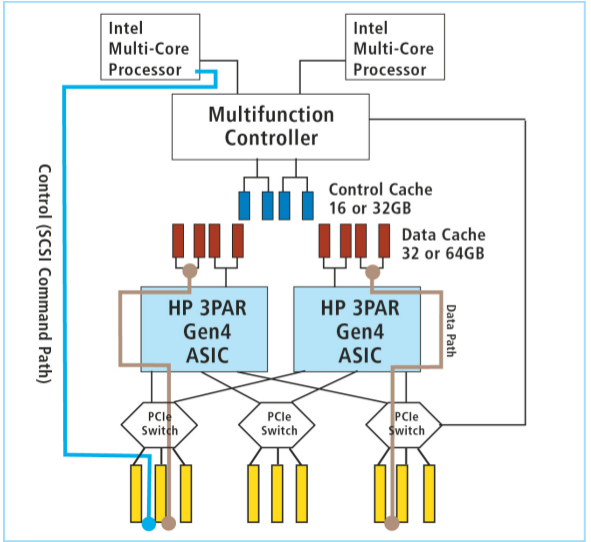

One of the things that stood out is the claim of being purpose built. Hitachi is still using a lot of custom components and a monolithic architecture on the system, while most of the rest of the industry is moving toward a hybrid of modular commodity and purpose-built hardware; EMC VMAX is a good example of this trend. The white paper, which was released about a year ago, even notes:

There are many storage subsystems on the market but only few can be considered as real hi-end or tier-1. EMC announced its VMAX clustered subsystem based on clustered servers in April 2009, but it is often still offering its predecessor, the DMX, as the first choice. It is rare in the industry that a company does not start ramping the new product approximately half a year after launching. Why EMC is not pushing its newer product more than a year after its announcement remains a mystery. The VMAX scales up by adding disks (if there are empty slots in a module) or adding modules, the latter of which are significantly more expensive. EMC does not participate in SPC benchmarks.

The other interesting aspect of the VSP is its dual chassis design (each chassis in a separate rack), which the white paper says is not a cluster, unlike a VMAX or a 3PAR system. (3PAR isn’t even a cluster in the way VMAX is, intentionally, on the belief that a more isolated design leads to higher availability.) I would assume this design is a response to earlier criticism of the USP-V, in which the virtualization layer was not redundant (when I learned that I was honestly pretty shocked). Hitachi later rectified the situation on the USP-V by adding some magic sauce that allowed you to link a pair of them together.

Anyway, with all this fancy stuff, I was obviously pretty interested when I noticed they had recently released SPC-1 numbers for the VSP. Surely customers don’t go out and buy a VSP for the raw performance, but because they may need to leverage the virtualization layer to abstract other arrays, or perhaps for the mainframe connectivity, or maybe because they get a comfortable feeling knowing that Hitachi offers a guarantee on the array under which they will compensate you in the event of data loss found to be the fault of the array (I believe the guarantee only covers data loss and not downtime, but am not completely sure). After all, it’s the only storage system on the planet with such a stamp of approval from the manufacturer (earlier high end HDS systems had the same guarantee).

Whatever the reason, performance is still a factor given the amount of cache and the sheer number of drives the system supports.

One thing that is probably never a factor is ease of use, since the USP/VSP are complex beasts to manage, something you very likely need significant training for. One story I remember being told: a local HDS rep in the Seattle area told a 3PAR customer something like “after a few short weeks of training you’ll feel as comfortable on our platform as you do on 3PAR”, and the customer replied “you just made my point for me”. Something like that, anyway.

Would the VSP dethrone the HP P10000? I found the timing of the release of the numbers an interesting coincidence; after all, the VSP is over a year old at this point, so why release now?

So I opened the PDF and hesitated... what would the results be? I do love 3PAR stuff, and I still view them as somewhat of an underdog even though they are under the HP umbrella.

Wow, was I surprised at the results.

Not. Even. Close.

The VSP’s performance was not much better than that of the USP-V, which was released more than four years ago. The performance itself is not bad, but it really puts the 3PAR V800 performance in some perspective:

- VSP – ~270,000 IOPS @ $8.18/IOP

- V800 – ~450,000 IOPS @ $6.59/IOP

But it doesn’t stop there:

- VSP – ~$45,600 per usable TB (39% unused storage ratio)

- V800 – ~$13,200 per usable TB (14% unused storage ratio)
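For a rough sense of scale, the figures above can be turned back into totals. SPC-1 price-performance is the tested price divided by SPC-1 IOPS, so multiplying the two back out gives an approximate tested price, and dividing that by the cost per usable TB gives the usable capacity those numbers imply. This is a back-of-the-envelope sketch using the rounded figures, so treat the outputs as ballpark values rather than numbers from either disclosure report.

```python
# Back-of-the-envelope comparison using the rounded figures quoted above.
SPC1_MAX_UNUSED_RATIO = 0.45  # unused storage ratio ceiling mentioned in the post

results = {
    "VSP": {"iops": 270_000, "usd_per_iop": 8.18, "usd_per_usable_tb": 45_600, "unused": 0.39},
    "V800": {"iops": 450_000, "usd_per_iop": 6.59, "usd_per_usable_tb": 13_200, "unused": 0.14},
}


def implied_total_price(iops: float, usd_per_iop: float) -> float:
    """SPC-1 price-performance is total price / IOPS, so multiply back out."""
    return iops * usd_per_iop


def implied_usable_tb(total_price: float, usd_per_usable_tb: float) -> float:
    """Usable capacity implied by a total price and a $/usable TB figure."""
    return total_price / usd_per_usable_tb


for name, r in results.items():
    price = implied_total_price(r["iops"], r["usd_per_iop"])
    usable = implied_usable_tb(price, r["usd_per_usable_tb"])
    ok = r["unused"] <= SPC1_MAX_UNUSED_RATIO
    print(f"{name}: ~${price / 1e6:.2f}M tested price, ~{usable:.0f} TB usable, "
          f"unused ratio within limit: {ok}")

iops_ratio = results["V800"]["iops"] / results["VSP"]["iops"]
tb_cost_ratio = results["VSP"]["usd_per_usable_tb"] / results["V800"]["usd_per_usable_tb"]
print(f"V800 IOPS advantage: {iops_ratio:.2f}x; VSP $/usable TB premium: {tb_cost_ratio:.2f}x")
```

Run as-is, this puts the VSP at roughly $2.2M for about 48TB usable and the V800 at roughly $3.0M for about 225TB usable, with the V800 delivering about 1.67x the IOPS.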

Hitachi managed to squeeze in just below the limit for unused storage, which is not allowed to be above 45%; sounds kind of familiar. As tested, the VSP had only 17TB more usable capacity than the original USP-V. This really shows how revolutionary sub-disk distributed RAID is for getting higher capacity utilization out of your system, and why I was quite disappointed when the VSP came out without anything resembling it.

Hitachi managed to improve their cost per IOP on the VSP vs the USP-V, but their cost per usable TB has skyrocketed, to about triple the price of the original USP-V results. I wonder why that is? (Correction: I miscounted the digits in my original calculations; in fact the VSP is significantly cheaper per usable TB than the USP-V was when it was tested!) One big change in the VSP is the move to 2.5″ disk drives. I believe the decision to use 2.5″ drives hampered the results somewhat: this is not an SPC-1/E Energy Efficiency test, so their lower power usage was never touted, and the largest 2.5″ 15k RPM drive available for this platform is 146GB (which is what was used).

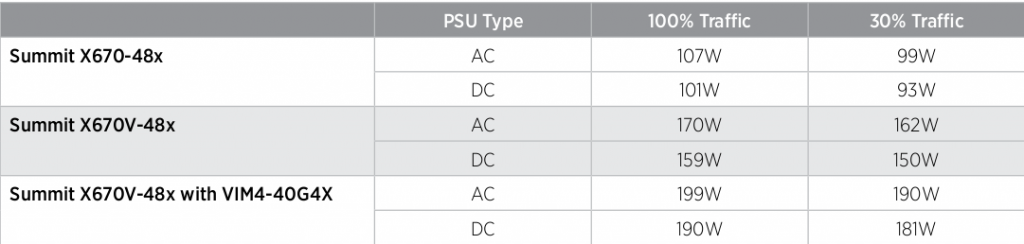

One of the customers (I presume) at an HP Storage presentation I attended recently was kind of dogging 3PAR, saying the VSP needs less power per rack than the T or V class (which requires 4x208V 30A per fully loaded rack).

I presume it needs less power because of the 2.5″ drives. Also, the drive chassis on 3PAR boxes draw quite a bit of power by themselves (200W empty on the T/V, 150W empty on the F; given the T/V chassis hold 2.5 times as many drives, that’s a pretty nice upgrade).
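To put that chassis overhead in per-drive terms, the sketch below spreads each chassis's empty-enclosure power across the drive capacity it provides. It assumes the T/V drive chassis holds 2.5 times as many drives as the F-class chassis and measures capacity in units of one F-class chassis, so the absolute drive counts don't matter for the comparison.

```python
def overhead_per_slot_unit(empty_chassis_watts: float, slot_units: float) -> float:
    """Empty-chassis power spread across the drive capacity it provides.

    Slot capacity is measured in units of one F-class chassis, so the
    comparison only depends on the assumed 2.5x ratio, not absolute drive counts.
    """
    return empty_chassis_watts / slot_units


F_CLASS = overhead_per_slot_unit(150, 1.0)   # 150W empty, 1 unit of slots
TV_CLASS = overhead_per_slot_unit(200, 2.5)  # 200W empty, assumed 2.5 units of slots

savings = 1 - TV_CLASS / F_CLASS
print(f"F: {F_CLASS:.0f} W per unit, T/V: {TV_CLASS:.0f} W per unit, {savings:.0%} less overhead")
# prints "F: 150 W per unit, T/V: 80 W per unit, 47% less overhead"
```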

To me, though, especially on a system like this, power usage is the last thing I would care about. The savings on disk space would pay for the next 10 years of power on the V800 vs the VSP.

My last big 3PAR array cut the number of spindles in half and the power usage in half vs the array it replaced, while giving us roughly 50% better performance with the same amount of usable storage. So knowing that, and the efficiency in general, I’m much less concerned about how much power a rack requires.

So Hitachi can get 384 x 2.5″ 15k RPM disks in a single rack and draw less power than 3PAR does with 320 disks in a single rack.

You could also think of it this way: Hitachi can get ~56TB RAW of 15k RPM space in a single rack, and 3PAR can get 192TB RAW of 15k RPM space in a single rack. That is roughly 3.4 times the RAW space for double the power, and nearly five times the usable space due to the architecture (4.72x precisely, with the V800 @ 80% utilization and the VSP @ 58%). For me that’s a good trade off, a no brainer really.
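The per-rack comparison works out as follows. This is a minimal sketch that assumes the 3PAR rack is 320 x 600GB drives (which is what 192TB over 320 disks implies), uses decimal units, and applies the utilization figures from the text without modeling RAID overhead separately.

```python
def rack_capacity_tb(disks: int, disk_gb: int, utilization: float) -> tuple[float, float]:
    """Return (raw TB, usable TB) for one rack, in decimal units."""
    raw_tb = disks * disk_gb / 1000
    return raw_tb, raw_tb * utilization


vsp_raw, vsp_usable = rack_capacity_tb(384, 146, 0.58)    # 2.5" 146GB 15k, 58% utilization
v800_raw, v800_usable = rack_capacity_tb(320, 600, 0.80)  # assumed 600GB 15k, 80% utilization

print(f"VSP:  {vsp_raw:.1f} TB raw, {vsp_usable:.1f} TB usable")
print(f"V800: {v800_raw:.1f} TB raw, {v800_usable:.1f} TB usable")
print(f"Raw ratio {v800_raw / vsp_raw:.2f}x, usable ratio {v800_usable / vsp_usable:.2f}x")
```

The raw ratio comes out at about 3.42x and the usable ratio at about 4.72x.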

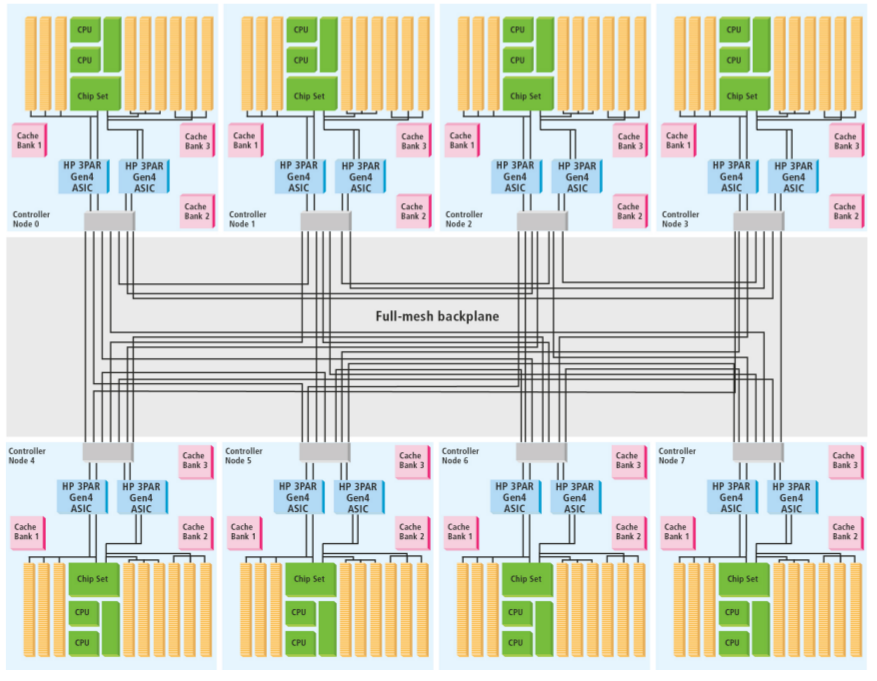

The thing I am not clear on with regard to these results is whether this is the best the VSP can do. This does appear to be a dual chassis system. The VSP supports 2,000 spindles, but the system tested only had 1,152, which is not much more than the 1,024 tested on the original USP-V. Also, the VSP supports up to 1TB of cache, but the system tested only had 512GB (the 3PAR V800 had 512GB of data cache too).

Maybe it is one of those situations where this is the optimal bang for the buck on the platform; perhaps the controllers just don’t have enough horsepower to push 2,000 spindles at full tilt. I don’t know.

Hitachi may have purpose-built hardware, but it doesn’t seem to do them a whole lot of good when it comes to raw performance. I don’t know about you, but I’d honestly feel let down if I was an HDS customer. Where is the incentive to upgrade from USP-V to VSP? The cost for usable storage is far higher, and the performance is not much better. Cost per IOP is lower, but I suspect the USP-V at this stage of the game, with more current pricing for disks and such, would be even more competitive than the VSP. (Correction to the above: I misread the digits as $13.5k/TB instead of $135k/TB for the USP-V, so the VSP is in fact cheaper per usable TB, making it a good upgrade from the USP-V from that perspective! Sorry for the error 🙂)

Maybe it’s in the software. I’ve of course never used a USP/VSP system, but perhaps the VSP has newer software that is somehow not backwards compatible with the USP, though I would not expect this to be the case.

Complexity is still high: the configuration of the array stretches from page 67 to page 100 of the full disclosure documentation. 3PAR’s configuration, by contrast, is roughly a single page.

Why do you think someone would want to upgrade from a USP-V to a VSP?

I still look forward to the day when the likes of EMC extend their VMAX architecture across their entire product line, and HDS also unifies their architecture. I don’t know if it’ll ever happen, but it should be possible, at least with VMAX, given EMC’s sound thumping of the Intel CPU drum set.

The HDS AMS 2000 series of products was by no means compelling either, when you could get higher availability (by means of persistent cache with 4 controllers), three times the amount of usable storage, and about the same performance on a 3PAR F400 for about the same amount of money.

Come on EMC, show us some VMAX SPC-1 love; you are, after all, a member of the SPC now (though it is kind of odd that your logo doesn’t show up, just the text of the company name).

One thing that has me wondering about this VSP configuration: with so little disk space available on the system, why would anyone bother with spinning rust on this platform for performance instead of just going SSD? Well, one reason may be that a 400GB SSD would run $35,000-40,000 (after a 50% discount, assuming that is list price), ouch. 220 of those (the system maximum is 256) would net you roughly 88TB raw (maybe 40TB usable), but cost $7.7M or more for the SSDs alone.
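Here is the VSP SSD arithmetic, using the $35,000-40,000 per 400GB drive range from the text. In this minimal sketch, "usable" is simply raw halved for RAID 1 mirroring, before any spares or utilization limits, which is why it lands a bit above the ~40TB guess.

```python
def ssd_config(count: int, ssd_gb: int, unit_price_low: float, unit_price_high: float) -> dict:
    """Raw/RAID 1 capacity (decimal TB) and cost range for an all-SSD build."""
    raw_tb = count * ssd_gb / 1000
    return {
        "raw_tb": raw_tb,
        "raid1_tb": raw_tb / 2,  # mirroring halves raw capacity; spares/utilization ignored
        "cost_low": count * unit_price_low,
        "cost_high": count * unit_price_high,
    }


vsp_ssd = ssd_config(220, 400, 35_000, 40_000)
print(f"{vsp_ssd['raw_tb']:.0f} TB raw, {vsp_ssd['raid1_tb']:.0f} TB RAID 1, "
      f"${vsp_ssd['cost_low'] / 1e6:.1f}M-${vsp_ssd['cost_high'] / 1e6:.1f}M")
# prints "88 TB raw, 44 TB RAID 1, $7.7M-$8.8M"
```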

On the V800 side, a 4-pack of 200GB SSDs will cost you roughly $25,000 after discount (assuming that is list price). Fully loading a V800 with the maximum of 384 SSDs (77TB raw) would cost roughly $2.4M for the SSDs alone (and still consume 7 racks). I think I like the 3PAR SSD strategy more, not that I would ever do such a configuration!
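The same arithmetic on the V800 side, plus a cost per raw TB comparison against the low-end VSP figure from the previous paragraph (220 x 400GB at $35,000 each). Prices are the rough post-discount numbers from the text, so this is only a sketch of relative cost.

```python
import math

SSDS = 384            # V800 maximum SSD count, per the text
SSD_GB = 200
PACK_SIZE = 4
PACK_PRICE = 25_000   # rough post-discount price per 4-pack, per the text

packs = math.ceil(SSDS / PACK_SIZE)
v800_cost = packs * PACK_PRICE
v800_raw_tb = SSDS * SSD_GB / 1000

# Low-end VSP figures from the previous paragraph: 220 x 400GB at $35,000 each.
vsp_cost = 220 * 35_000
vsp_raw_tb = 220 * 400 / 1000

print(f"V800: {packs} packs, ${v800_cost / 1e6:.1f}M, {v800_raw_tb:.1f} TB raw")
print(f"$/raw TB: V800 ~${v800_cost / v800_raw_tb:,.0f}, VSP ~${vsp_cost / vsp_raw_tb:,.0f}")
```

That works out to about $31,250 per raw TB for the V800 vs about $87,500 for the VSP.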

Usable space would of course be higher if the SSD configurations used RAID 5 instead of RAID 1; I just used RAID 1 for simplicity.
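For a feel of how much RAID 5 would change things, here is the raw-to-usable conversion for both SSD configurations. This sketch assumes a 7+1 RAID 5 set (chosen only for illustration) and ignores spares and utilization limits.

```python
def raid_usable_tb(raw_tb: float, data_disks: int, redundancy_disks: int) -> float:
    """Usable share of raw capacity for a RAID set of data + redundancy disks."""
    return raw_tb * data_disks / (data_disks + redundancy_disks)


configs = {"VSP (220 x 400GB)": 88.0, "V800 (384 x 200GB)": 76.8}
for name, raw in configs.items():
    raid1 = raid_usable_tb(raw, 1, 1)  # mirroring: one data copy plus one mirror
    raid5 = raid_usable_tb(raw, 7, 1)  # assumed 7+1 RAID 5 set
    print(f"{name}: RAID 1 {raid1:.1f} TB, RAID 5 (7+1) {raid5:.1f} TB")
```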

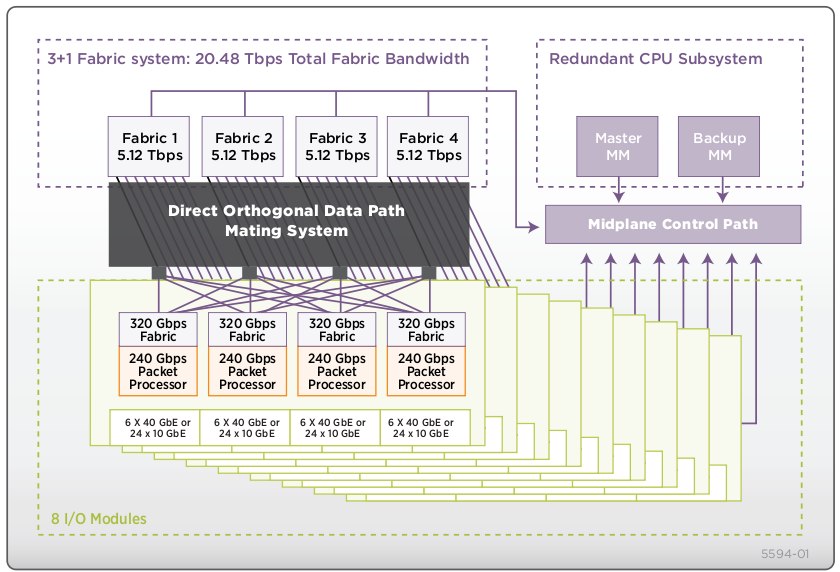

It goes to show that these big boxes have a long way to go before they can truly leverage the raw performance of SSDs. With 200-300 SSDs in either of these boxes, the controllers would be maxed out and the SSDs would probably be idle for the most part. I’ve been saying for a while how stupid it seems for such a big storage system to be overwhelmed so completely by SSDs, and that storage systems need to be an order of magnitude (or more) faster. 3PAR made a good start by doubling performance, but I’m thinking boxes like these need to do at least 1M IOPS to disk, if not 2M. How long will it take to get there?
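A rough way to see why the SSDs would sit mostly idle is to divide a controller ceiling by the number of SSDs behind it. This sketch treats the V800's ~450,000 SPC-1 IOPS as a stand-in for its controller ceiling, which is an assumption (SPC-1 is a specific mixed workload, not a raw controller limit), and compares it with the 1M and 2M IOPS targets.

```python
def per_ssd_share(controller_iops: float, ssd_count: int) -> float:
    """IOPS each SSD would see if the controllers were the bottleneck."""
    return controller_iops / ssd_count


ceilings = {"V800 today (SPC-1 proxy)": 450_000, "1M target": 1_000_000, "2M target": 2_000_000}
for label, ceiling in ceilings.items():
    shares = [per_ssd_share(ceiling, n) for n in (200, 300)]
    print(f"{label}: {shares[0]:,.0f} IOPS/SSD at 200 SSDs, {shares[1]:,.0f} at 300")
```

At today's ceiling each SSD would only be asked for roughly 1,500-2,250 IOPS.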

Maybe HDS could show better results with 900GB 10k RPM disks (putting in something like 1,700 of them instead of the 1,152 146GB 15k RPM disks). That should hopefully show much lower costs per usable TB, though their cost per IOP would probably shoot up. From what I can see, though, 600GB is the largest 2.5″ 10k RPM disk supported on this platform. A 900GB disk @ 58% utilization would yield about 522GB, and a 600GB disk on 3PAR @ 80% utilization would yield about 480GB.
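The per-disk figures are simply drive size times capacity utilization (58% as in the VSP result, 80% for the V800). This trivial sketch, like the text, leaves RAID overhead out, and it also shows what the 600GB disk that is actually supported would yield at the VSP's 58%.

```python
def usable_per_disk_gb(disk_gb: float, utilization: float) -> float:
    """Capacity a single drive contributes at a given utilization (RAID not modeled)."""
    return disk_gb * utilization


options = {
    "VSP, hypothetical 900GB 10k": (900, 0.58),
    "VSP, 600GB 10k (largest supported)": (600, 0.58),
    "3PAR, 600GB": (600, 0.80),
}
for label, (size, util) in options.items():
    print(f"{label}: ~{usable_per_disk_gb(size, util):.0f} GB")
# ~522 GB, ~348 GB and ~480 GB respectively
```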

I suspect they could get their costs down significantly more if they went old school and supported larger 3.5″ 15k RPM disks and used those instead (the VSP supports 3.5″ disks, just nothing other than 2TB Nearline (7200 RPM) and 400GB Flash, a curious decision). If you were a customer, isn’t that something you’d be interested in? Another compromise you make with 3.5″ disks on the VSP is that you’re then limited to 1,280 spindles, rather than 2,048 with 2.5″. This could be a side effect of the maximum addressable capacity of 2.5PB, which is reached with 1,280 2TB disks; they could very well probably support 2,048 3.5″ disks if those fit within the 2.5PB limit.
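A quick check of the capacity-ceiling explanation, taking the 2.5PB figure from the text and decimal units. It is approximate: 1,280 x 2TB comes out slightly above 2.5PB, so the exact limit presumably depends on how HDS counts capacity, which I haven't verified.

```python
CAPACITY_LIMIT_TB = 2_500  # VSP maximum addressable capacity from the text (2.5PB)


def total_raw_tb(disks: int, disk_tb: float) -> float:
    """Raw capacity of a set of identical disks, in TB."""
    return disks * disk_tb


def largest_disk_tb_for(disks: int, limit_tb: float = CAPACITY_LIMIT_TB) -> float:
    """Biggest per-disk size that keeps `disks` spindles under the capacity limit."""
    return limit_tb / disks


print(f"1,280 x 2TB = {total_raw_tb(1280, 2):,.0f} TB")  # roughly at the limit
print(f"2,048 x 2TB = {total_raw_tb(2048, 2):,.0f} TB")  # well over the limit
print(f"Largest disk for 2,048 spindles: ~{largest_disk_tb_for(2048):.2f} TB")
```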

Their documentation says the actual maximum usable capacity with 2TB Nearline disks is 1.256PB (with RAID 10). At 58% capacity utilization, that 1.256PB drops dramatically to roughly 730TB. With RAID 5 (7+1) they say 2.19PB usable; taking that 58% number again gives roughly 1.27PB.

V800, by contrast, would top out at 640TB with RAID 10 @ 80% utilization (stupid raw capacity limits!! *shakes fist at sky*), or somewhere in the 880TB-1.04PB range (@ 80%) with RAID 5 (depending on the data/parity ratio).
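The effective-capacity figures for both arrays can be sketched as below. The VSP inputs are the documented usable figures quoted above; for the V800 I'm assuming a 1.6PB raw ceiling, which is what the 640TB RAID 10 @ 80% figure implies. The RAID 5 range is not reproduced here, since it depends on the data/parity ratio.

```python
def effective_tb(usable_tb: float, utilization: float) -> float:
    """Usable capacity after applying a capacity-utilization factor."""
    return usable_tb * utilization


# VSP, 2TB Nearline, documented usable capacity (TB), at 58% utilization.
vsp_raid10 = effective_tb(1_256, 0.58)
vsp_raid5 = effective_tb(2_190, 0.58)

# V800: assumed 1.6PB raw ceiling, RAID 10 halves it, then 80% utilization.
V800_RAW_LIMIT_TB = 1_600
v800_raid10 = effective_tb(V800_RAW_LIMIT_TB / 2, 0.80)

print(f"VSP RAID 10: ~{vsp_raid10:.0f} TB, VSP RAID 5 (7+1): ~{vsp_raid5:.0f} TB")
print(f"V800 RAID 10: ~{v800_raid10:.0f} TB")
```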

Another strange decision on both HDS’s and 3PAR’s part was to certify only the 2TB disk on their platforms, and nothing smaller, since both systems become tapped out at roughly half the number of supported spindles when using 2TB disks due to capacity limits.

An even more creative solution (to work around the limitation!!), which I doubt is possible, would be to somehow restrict the addressable capacity of each disk to half its size, so you could in effect get 1,600 2TB disks in each system with 1TB of capacity each. Then, when they release software updates to scale the box to even higher levels of capacity (32GB of control cache can EASILY handle more capacity), just unlock the drives and get that extra capacity for free. That would be nice at least, though it probably won’t happen. I bet it’s technically possible on the 3PAR platform due to their chunklets. 3PAR in general doesn’t seem as interested in creative solutions as I am 🙂 (I’m sure HDS is even more rigid.)
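The capacity-capping idea can be modeled simply: the number of drives a system can take is whichever is smaller, the drive-slot limit or the capacity limit divided by the addressable size per drive. Everything here is hypothetical and not a feature of either platform; the 1.6PB V800 limit is inferred from the 640TB RAID 10 @ 80% figure, and the 1,920 slot limit is an assumption for illustration.

```python
def drives_that_fit(capacity_limit_tb: float, addressable_tb_per_drive: float, slot_limit: int) -> int:
    """Drives usable before hitting either the slot limit or the capacity limit."""
    by_capacity = int(capacity_limit_tb // addressable_tb_per_drive)
    return min(slot_limit, by_capacity)


CAPACITY_LIMIT_TB = 1_600  # V800 raw limit inferred from 640TB @ RAID 10 / 80%
SLOT_LIMIT = 1_920         # hypothetical drive-slot limit, for illustration only

full = drives_that_fit(CAPACITY_LIMIT_TB, 2.0, SLOT_LIMIT)    # 2TB drives, fully addressable
capped = drives_that_fit(CAPACITY_LIMIT_TB, 1.0, SLOT_LIMIT)  # same drives capped at 1TB each
print(f"Fully addressable 2TB drives: {full}; capped at 1TB: {capped}")
# If a later release raised the capacity limit, unlocking the capped drives
# would double their raw contribution without adding spindles.
print(f"Raw after unlocking: {capped * 2.0:,.0f} TB")
```

With these inputs the cap lets 1,600 drives in instead of 800, which is the trade the idea above is after.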

Bottom Line

So HDS has managed to get their cost for usable space down quite a bit, from $135,000/TB to around $45,000/TB, and to improve performance by about 25%.

They still have a long way to go in the areas of efficiency, performance, scalability, simplicity and unified architecture. I’m sure there will be plenty of customers out there who don’t care about those things (or are just not completely informed) and will continue to buy the VSP for other reasons; it’s still a solid platform if you’re willing to make those trade offs.